Matthias Klumpp: How to indicate device compatibility for your app in MetaInfo data

At the moment I am hard at work putting together the final bits for the AppStream 1.0 release (hopefully to be released this month). The new release comes with many new features, an improved developer API and the removal of most deprecated things (so it carefully breaks compatibility with very old data and the previous C API). One of the tasks for the upcoming 1.0 release was issue #481, which asked for a formal way to distinguish Linux phone applications from desktop applications.

AppStream infamously does not support any "is-for-phone" label for software components. Instead, the decision whether something is compatible with a device is based on the device's capabilities and the component's requirements. This allows truly adaptive applications to describe their requirements correctly, and does not lock us into form factors going forward, as there are many of them and the feature range between a phone, a tablet and a tiny laptop is quite fluid.

Of course, the "match to current device capabilities" check does not work if you are a website ranking phone compatibility. It also does not really work if you are a developer who wants to know which devices your component/application will actually be considered compatible with. One goal for AppStream 1.0 is to have its library provide more complete building blocks to software centers. Instead of just a "here's the data, interpret it according to the specification" API, libappstream now interprets the specification for the application and provides API to handle the most common operations, like checking device compatibility. For developers, AppStream also now implements a few "virtual chassis configurations" to roughly gauge which configurations a component may be compatible with.

To test the new code, I ran it against the large Debian and Flatpak repositories to check which applications are already considered compatible with which chassis/device type. The result was fairly disastrous, with many applications not specifying compatibility correctly (many do, but it's by far not the norm!). Which brings me to the actual topic of this blog post: very few people seem to really know how to mark an application compatible with certain screen sizes and inputs! This is most certainly a result of incomplete guides and a lack of good templates, so maybe this post can help with that a bit:

The ultimate cheat-sheet to mark your app chassis-type compatible

As a quick reminder, compatibility is indicated using AppStream's relations system: a requires relation indicates that the software will not run at all, or will run terribly, if the requirement is not met. If the requirement is not met, the software should not be installable on that system. A recommends relation means that it would be advantageous to have the recommended items, but they are not essential to run the application (it may run with a degraded experience without them, though). And a supports relation means a given interface/device/control/etc. is supported by this application, but the application may work completely fine without it.
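To make the three relation kinds concrete, here is a minimal Python sketch of how a software center *might* fold per-item check results into one decision. The names `Verdict` and `classify` are hypothetical and are not part of libappstream's API; real software centers may treat unmet recommendations differently (for example, by hiding the app by default rather than labelling it).

```python
from enum import Enum


class Verdict(Enum):
    """Hypothetical outcome categories; not libappstream API."""
    INSTALLABLE = "installable"          # all requires and recommends items met
    DEGRADED = "degraded"                # requires met, some recommends unmet
    NOT_INSTALLABLE = "not installable"  # at least one requires item unmet


def classify(requires_met: list[bool], recommends_met: list[bool]) -> Verdict:
    """Fold per-item check results into a single verdict.

    There is deliberately no parameter for `supports` items: they only
    describe optional extras and never make software incompatible.
    """
    if not all(requires_met):
        return Verdict.NOT_INSTALLABLE
    if not all(recommends_met):
        return Verdict.DEGRADED
    return Verdict.INSTALLABLE


# A phone app on a laptop: display requirement met, touch recommendation not.
print(classify(requires_met=[True], recommends_met=[False]))
```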

I have a desktop-only application

A desktop-only application is characterized by needing a larger screen to fit the application, and by requiring a physical keyboard and accurate mouse input. This type is assumed by default if no capabilities are set for an application, but it's better to be explicit. This is the metadata you need:

    <component type="desktop-application">
      <id>org.example.desktopapp</id>
      <name>DesktopApp</name>
      [...]
      <requires>
        <display_length>768</display_length>
        <control>keyboard</control>
        <control>pointing</control>
      </requires>
      [...]
    </component>

With this requires relation, you require a small-desktop-sized screen (at least 768 device-independent pixels (dp) on its shortest edge) and require a keyboard and mouse to be present or connectable. Of course, if your application needs more minimum space, adjust the requirement accordingly. Note that if the requirement is not met, your application may not be offered for installation.
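As a rough illustration of what evaluating that requires block against a device involves, here is a Python sketch using only the standard library's `xml.etree.ElementTree`. The device description (shortest edge in logical pixels plus a set of control names) and the function `unmet_requirements` are hypothetical. Only numeric `display_length` values with the default "at least" comparison and `control` items are handled, so this is nowhere near a full implementation of the specification.

```python
import xml.etree.ElementTree as ET

# The desktop example above, minus the [...] elisions so that it parses.
DESKTOP_APP = """
<component type="desktop-application">
  <id>org.example.desktopapp</id>
  <name>DesktopApp</name>
  <requires>
    <display_length>768</display_length>
    <control>keyboard</control>
    <control>pointing</control>
  </requires>
</component>
"""


def unmet_requirements(xml_text: str, shortest_edge_dp: int, controls: set[str]) -> list[str]:
    """List the <requires> items a (hypothetical) device does not satisfy.

    Only numeric display_length values compared as "at least" and
    control items are understood; everything else is ignored.
    """
    requires = ET.fromstring(xml_text.strip()).find("requires")
    if requires is None:
        return []
    problems = []
    for item in requires:
        value = (item.text or "").strip()
        if item.tag == "display_length" and shortest_edge_dp < int(value):
            problems.append(f"display_length: need >= {value} dp, have {shortest_edge_dp} dp")
        elif item.tag == "control" and value not in controls:
            problems.append(f"control: missing {value}")
    return problems


# A touch-only phone with a 360 dp shortest edge fails all three requirements.
for problem in unmet_requirements(DESKTOP_APP, 360, {"touch"}):
    print(problem)
```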

Note: Device-independent / logical pixels

One logical pixel (= device-independent pixel) roughly corresponds to the visual angle of one pixel on a device with a pixel density of 96 dpi (for historical X11 reasons) at a distance of about 52 cm from the observer, making the physical pixel about 0.26 mm in size. Logical pixels might not always map to exact physical lengths, as their exact size is defined by the device providing the display. They do, however, accurately depict the maximum number of pixels that can be drawn in the given direction on the device's display space. AppStream always uses logical pixels when measuring lengths in pixels.
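The numbers in this note can be checked with a little arithmetic. The sketch below derives the physical pixel size at 96 dpi and the visual angle it covers at roughly 52 cm, then shows how a physical pixel count turns into logical pixels for a given scale factor. The 1080 px phone panel and the scale factor of 3 are hypothetical example values, and real devices may use fractional scaling.

```python
import math

MM_PER_INCH = 25.4
REFERENCE_DPI = 96            # the historical X11 reference density
VIEWING_DISTANCE_MM = 520     # "about 52 cm" from the note

# Physical size of one pixel at 96 dpi: 25.4 mm / 96, i.e. about 0.26 mm.
pixel_mm = MM_PER_INCH / REFERENCE_DPI

# Visual angle subtended by that pixel at the viewing distance, in arc minutes.
angle_arcmin = math.degrees(2 * math.atan(pixel_mm / 2 / VIEWING_DISTANCE_MM)) * 60


def to_logical(physical_px: int, scale: float) -> int:
    """Convert a physical pixel count to logical pixels for a display scale factor."""
    return round(physical_px / scale)


print(f"pixel size: {pixel_mm:.3f} mm, visual angle: {angle_arcmin:.2f} arcmin")
# A hypothetical phone panel 1080 physical px wide, scaled 3x, offers 360 logical px.
print(to_logical(1080, 3))
```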

I have an application that works on mobile and on desktop / an adaptive app

Adaptive applications have fewer hard requirements, but support a wide range of controls and screen sizes. For example, unlike desktop apps, they support touch input. An example MetaInfo snippet for this kind of app may look like this:

    <component type="desktop-application">
      <id>org.example.adaptive_app</id>
      <name>AdaptiveApp</name>
      [...]
      <requires>
        <display_length>360</display_length>
      </requires>
      <supports>
        <control>keyboard</control>
        <control>pointing</control>
        <control>touch</control>
      </supports>
      [...]
    </component>

Unlike the pure desktop application, this adaptive application requires a much smaller minimum length for the display's shortest edge, and also supports touch input in addition to keyboard and mouse/touchpad precision input.
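Because supports items never gate installation, a software center can use them purely to tell users which optional input methods will work on their device. The Python sketch below shows that idea for the adaptive example; `relation_items` and `supported_controls_present` are hypothetical helper names, and the XML is the snippet above with the [...] elisions removed.

```python
import xml.etree.ElementTree as ET

# The adaptive example above, minus the [...] elisions.
ADAPTIVE_APP = """<component type="desktop-application">
  <id>org.example.adaptive_app</id>
  <name>AdaptiveApp</name>
  <requires>
    <display_length>360</display_length>
  </requires>
  <supports>
    <control>keyboard</control>
    <control>pointing</control>
    <control>touch</control>
  </supports>
</component>"""


def relation_items(xml_text: str, kind: str) -> list[tuple[str, str]]:
    """Return (tag, value) pairs from a <requires>, <recommends> or <supports> block."""
    block = ET.fromstring(xml_text).find(kind)
    if block is None:
        return []
    return [(child.tag, (child.text or "").strip()) for child in block]


def supported_controls_present(xml_text: str, device_controls: set[str]) -> dict[str, bool]:
    """Report, for each supported control, whether the device has it.

    A False entry is informational only: it never blocks installation.
    """
    return {
        value: value in device_controls
        for tag, value in relation_items(xml_text, "supports")
        if tag == "control"
    }


# On a touch-only phone the only hard requirement is the 360 dp display edge.
print(relation_items(ADAPTIVE_APP, "requires"))
print(supported_controls_present(ADAPTIVE_APP, {"touch"}))
```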

I have a pure phone/tablet app

Making an application a pure phone application is tricky: we need to mark it as compatible with phones only, while not completely preventing its installation on non-phone devices (even though its UI will be horrible there, you may want to test the app, and software centers may allow its installation when requested explicitly even if they don't show it by default). This is how to achieve that result:

    <component type="desktop-application">
      <id>org.example.phoneapp</id>
      <name>PhoneApp</name>
      [...]
      <requires>
        <display_length>360</display_length>
      </requires>
      <recommends>
        <display_length compare="lt">1280</display_length>
        <control>touch</control>
      </recommends>
      [...]
    </component>

We require a phone-sized minimum for the display's shortest edge (adjust this to a value that fits your app!), but also recommend that the shortest edge be smaller than that of a larger tablet/laptop, while also recommending touch input and not listing any support for keyboard and mouse.
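The phone example relies on the compare attribute. My understanding of the specification is that display_length accepts the compare values eq, ne, lt, gt, le and ge, with ge being the default when no compare attribute is given; please double-check this against the relations section of the AppStream specification. Under that assumption, the logic of the phone example can be sketched in Python as follows (`display_length_ok` and `phone_app_fit` are hypothetical names):

```python
import operator

# Assumed compare values for display_length; "ge" is used when none is given.
COMPARE_OPS = {
    "eq": operator.eq,
    "ne": operator.ne,
    "lt": operator.lt,
    "gt": operator.gt,
    "le": operator.le,
    "ge": operator.ge,
}


def display_length_ok(device_dp: int, value_dp: int, compare: str = "ge") -> bool:
    """Compare a device's shortest display edge (in dp) with a display_length item."""
    return COMPARE_OPS[compare](device_dp, value_dp)


def phone_app_fit(device_dp: int) -> str:
    """Evaluate the phone example: requires >= 360 dp, recommends < 1280 dp."""
    if not display_length_ok(device_dp, 360):
        return "requirement not met"
    if not display_length_ok(device_dp, 1280, "lt"):
        return "recommendation not met"
    return "ok"


# A device whose shortest edge is exactly 1280 dp misses the "lt" recommendation.
for edge in (320, 360, 800, 1280):
    print(edge, phone_app_fit(edge))
```

Note that the recommendation uses a strict "less than", which is why a display with exactly 1280 dp on its shortest edge already misses it.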

Please note that this blog post is of course not a comprehensive guide, so if you want to dive deeper into what you can do with requires/recommends/suggests/supports, you may want to have a look at the relations tags described in the AppStream specification.

Validation

It is still easy to make mistakes with the system requirements metadata, which is why AppStream 1.0 will provide more commands to check MetaInfo files for system compatibility. Current pre-1.0 AppStream versions already have an is-satisfied command to check if the application is compatible with the currently running operating system:

    :~$ appstreamcli is-satisfied ./org.example.adaptive_app.metainfo.xml
    Relation check for: */*/*/org.example.adaptive_app/*

    Requirements:
      Unable to check display size: Can not read information without GUI toolkit access.

    Recommendations:
      No recommended items are set for this software.

    Supported:
      Physical keyboard found.
      Pointing device (e.g. a mouse or touchpad) found.
      This software supports touch input.

In addition to this command, AppStream 1.0 will introduce a new one as well: check-syscompat. This command will check the component against libappstream's mock system configurations, which define a "most common" (whatever that is at the time) configuration for each chassis type.
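To give a feel for what checking against mock configurations means, here is a simplified Python sketch that evaluates the phone example against a handful of made-up chassis. The 1280 dp shortest edge for Desktop and Laptop matches the check-syscompat output further down; every other value (the tablet and handset edge lengths, and each chassis' set of input controls) is a placeholder chosen for illustration, not libappstream's actual mock data. `MockChassis` and `phone_app_problems` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MockChassis:
    """A hypothetical 'typical' device for one chassis type."""
    name: str
    shortest_edge_dp: Optional[int]   # None means the device has no display
    controls: set[str] = field(default_factory=set)


# Placeholder values, except the 1280 dp desktop/laptop edges.
CHASSIS = [
    MockChassis("Desktop", 1280, {"keyboard", "pointing"}),
    MockChassis("Laptop", 1280, {"keyboard", "pointing"}),
    MockChassis("Server", None),
    MockChassis("Tablet", 800, {"touch"}),
    MockChassis("Handset", 360, {"touch"}),
]


def phone_app_problems(chassis: MockChassis) -> list[str]:
    """Return why the phone example does not fit this chassis (empty list = fits)."""
    problems = []
    edge = chassis.shortest_edge_dp
    if edge is None:
        problems.append("requires: a display")
    elif edge < 360:
        problems.append("requires: shortest edge >= 360 dp")
    if edge is not None and not edge < 1280:
        problems.append("recommends: shortest edge < 1280 dp")
    if "touch" not in chassis.controls:
        problems.append("recommends: touch input")
    return problems


for chassis in CHASSIS:
    problems = phone_app_problems(chassis)
    # Any unmet requires or recommends item is reported as incompatible here.
    print(f"{chassis.name}: {'Compatible' if not problems else 'Incompatible'}")
    for problem in problems:
        print(f"  {problem}")
```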

If you pass the --details flag, you can even get an explanation of why the component was or was not considered compatible with a specific chassis type:

    :~$ appstreamcli check-syscompat --details ./org.example.phoneapp.metainfo.xml
    Chassis compatibility check for: */*/*/org.example.phoneapp/*

    Desktop:
      Incompatible
      recommends: This software recommends a display with its shortest edge
                  being << 1280 px in size, but the display of this device has 1280 px.
      recommends: This software recommends a touch input device.

    Laptop:
      Incompatible
      recommends: This software recommends a display with its shortest edge
                  being << 1280 px in size, but the display of this device has 1280 px.
      recommends: This software recommends a touch input device.

    Server:
      Incompatible
      requires: This software needs a display for graphical content.
      recommends: This software needs a display for graphical content.
      recommends: This software recommends a touch input device.

    Tablet:
      Compatible (100%)

    Handset:
      Compatible (100%)

I hope this is helpful for people. Happy metadata writing!


The new 7945HX CPU from AMD is currently the most powerful. I'd love to have one of them, to replace the now-aging 6-core Xeon that I've been using for more than 5 years. So, I've been searching for a laptop with that CPU.

Absolutely all of the laptops I found with this CPU also embed a very powerful RTX 40x0 series GPU, which I have no use for: I don't play games, and I don't do AI. I just want something that builds Debian packages fast (like Ceph, which takes more than 1h to build for me). The more cores I get, the faster all OpenStack unit tests run too (stestr does a moderately good job at spreading the tests across all cores). It'd be OK if I had to pay more for a GPU that I don't need, and I would deal with the annoyance of the NVidia driver, if only I could find something with the right size. But I can only find 16-inch or bigger laptops, which won't fit in my scooter's back case (most of the time, these laptops have a 17-inch screen: that's way too big).

Currently, I found:


Some time ago, I decided to make a small banner with the

So, with the encouragement of a few friends who were in on the secret, this happened. In two copies, because the first attempt at the print had issues.

And yesterday we finally met that friend again, gave him all of the banners, and no violence happened, but he liked them :D

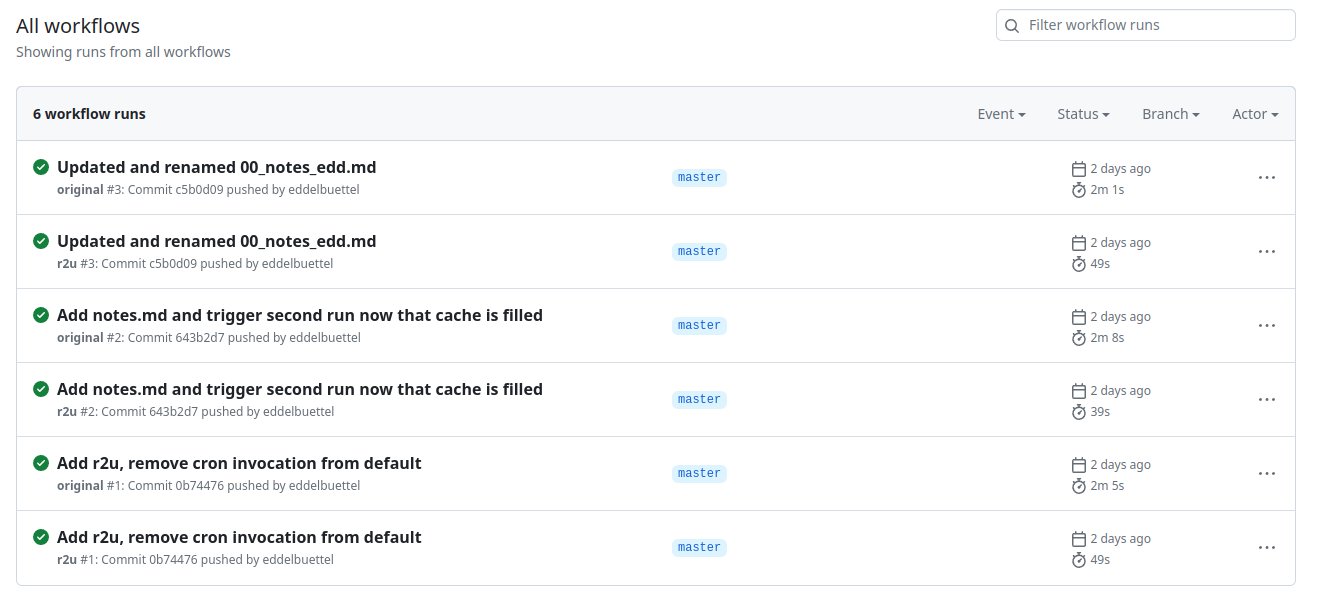

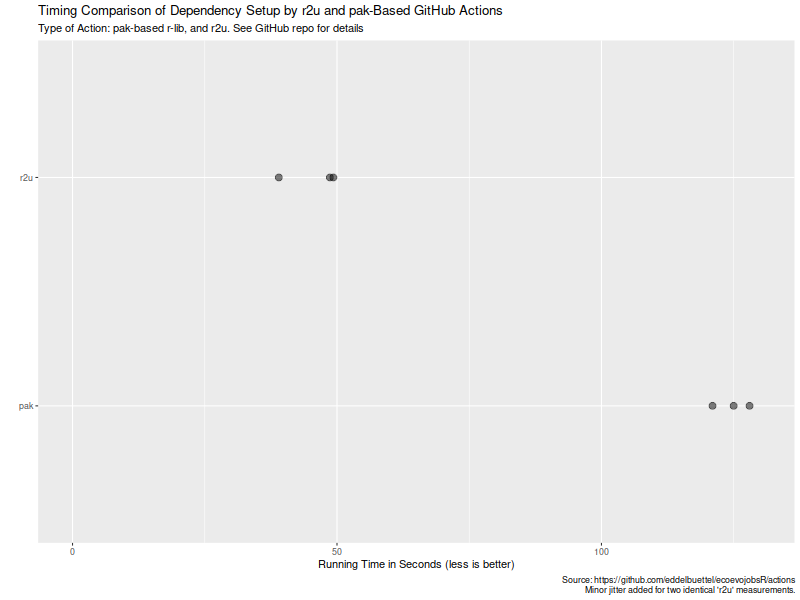

Welcome to the 43rd post in the

Turns out maybe not so much (yet?). As the

Now, it is of course entirely possible that not all possible avenues for speedups were exploited in how the action was set up. If so, please file an issue at the

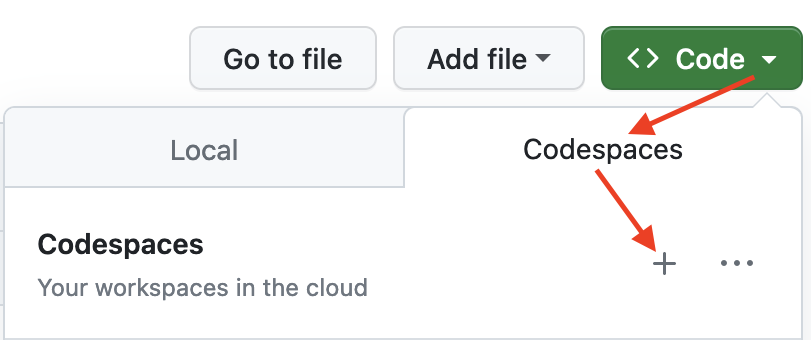

The first time you do this, it will open up a new browser tab where your Codespace is being instantiated. This first-time instantiation will take a few minutes (feel free to click "View logs" to see how things are progressing), so please be patient. Once built, your Codespace will deploy almost immediately when you use it again in the future.

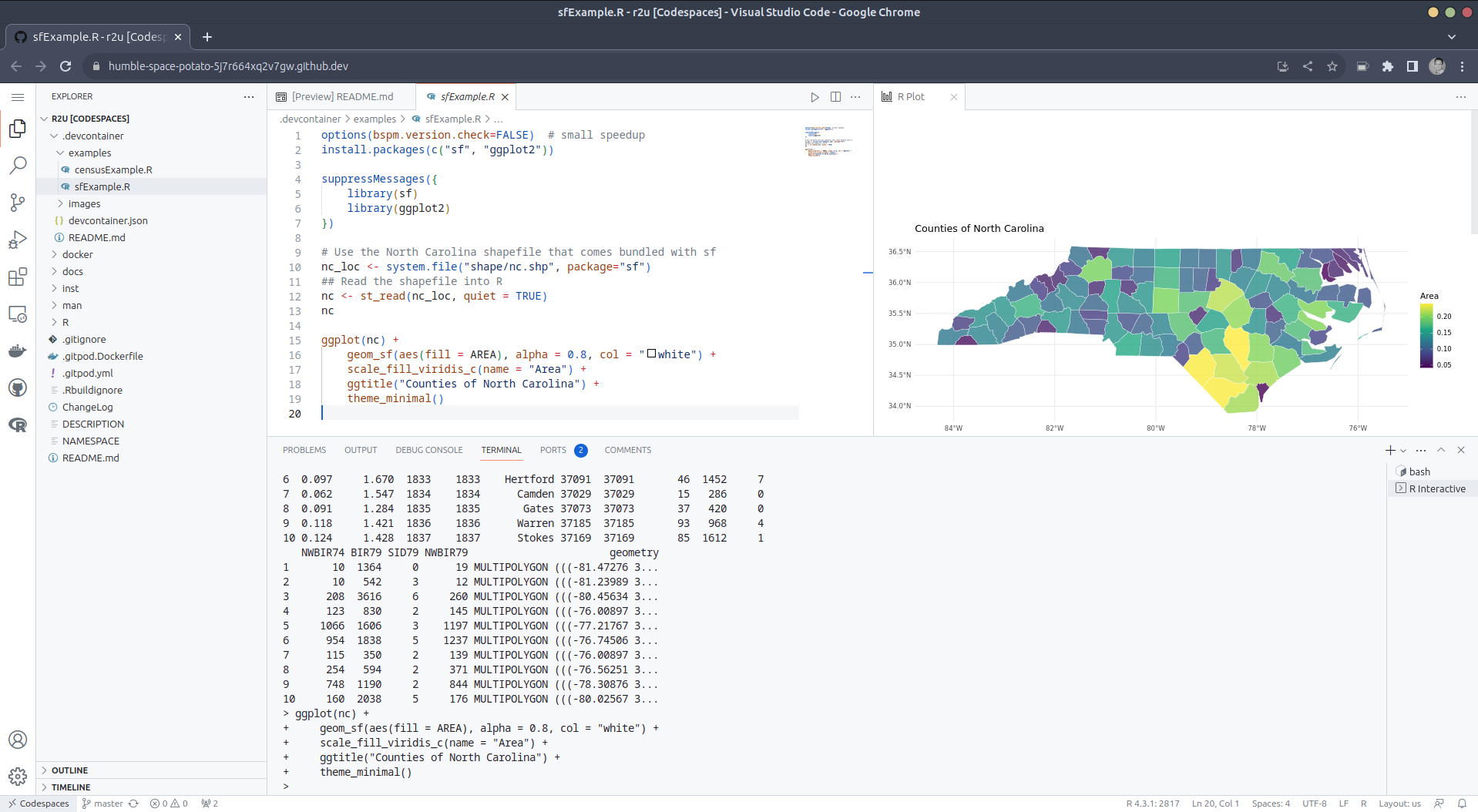

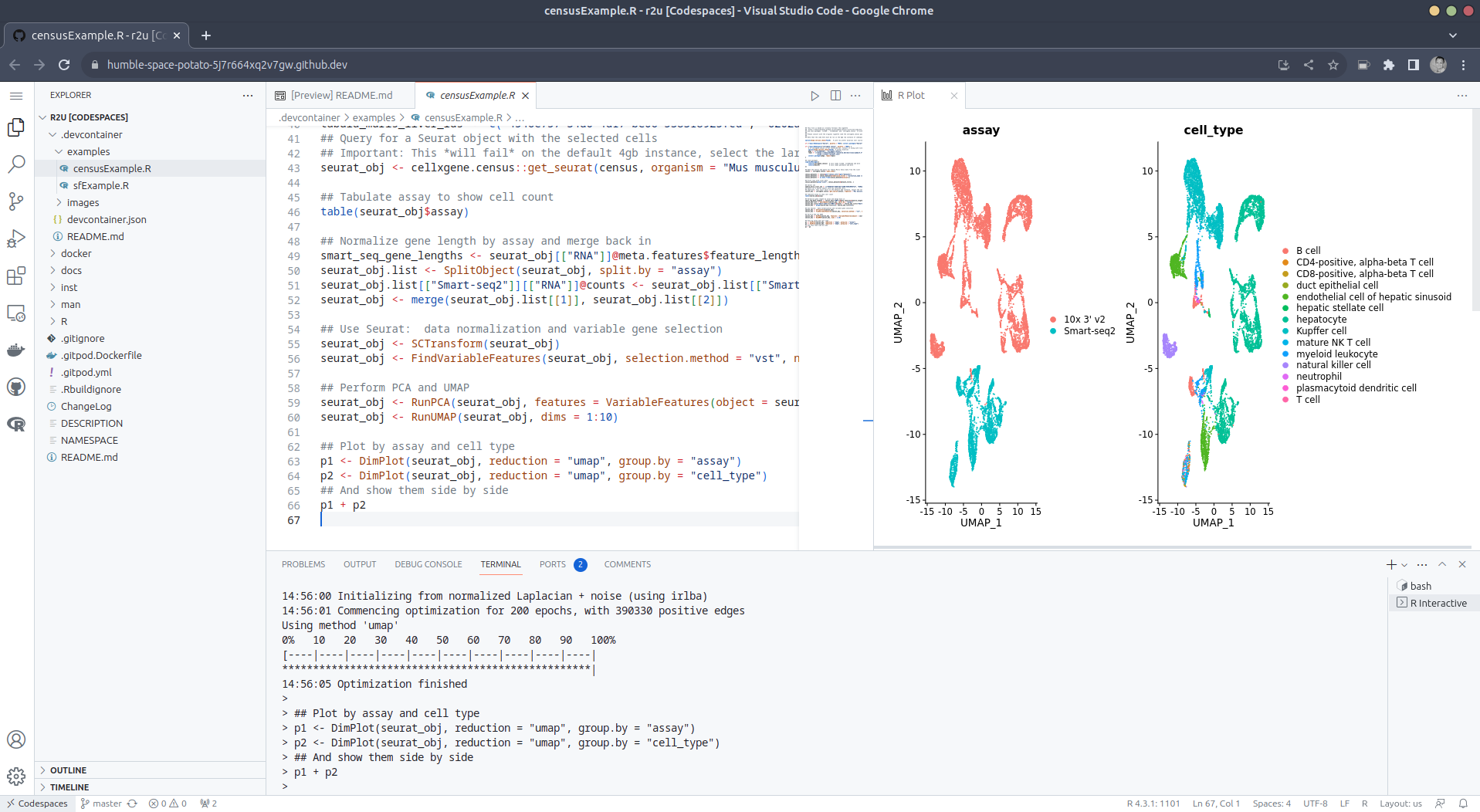

After the VS Code editor opens up in your browser, feel free to open up the

(Both example screenshots reflect the initial

I've never been a fan of IoT devices for obvious reasons: not only do they tend to be excellent at being expensive vendor-locked-in machines, but far too often, they also end up turning into e-waste after a short amount of time. Manufacturers can go out of business or simply decide to

A real sad state of affairs